AI is supposed to be a tool that helps cybersecurity teams catch malicious actors. However, the annual Devo SOC Performance Report™ suggests there’s still work to be done.

Artificial intelligence has been (and continues to be) a popular topic of discussion in areas ranging from science fiction to cybersecurity. While the humanlike androids envisioned by Asimov, Bradbury, Philip K. Dick, and other storytellers remain science fiction, artificial intelligence is real and playing an increasingly large role in many aspects of our lives.

While it’s interesting to watch the debate over the advantages and disadvantages of human-like robots with AI brains, a much more routine but equally powerful form of AI is starting to play a role in cybersecurity.

This AI’s purpose is to support hardworking security professionals. Security operations center (SOC) analysts are often overloaded by the never-ending stream of alerts and notifications hitting their organizations’ security systems daily. Alarm fatigue has become an industry-wide cause of analyst burnout.

Ideally, AI could help SOC analysts keep pace with and stay ahead of well-educated and persistent threat actors who are using AI effectively for criminal or espionage purposes. Nevertheless, that doesn’t seem to be happening yet.

The Big AI Illusion

The Devo SOC Performance Report™ is a survey of 200 IT security professionals that aims to determine how they feel about AI. It covers AI implementations that address a range of defensive disciplines, including threat detection, breach risk prediction, and incident response/management.

Myth #1: AI-Powered Cybersecurity is Already Here

All survey respondents state their organization is using AI in one or more areas. The most common usage area is IT asset inventory management, followed by threat detection (which is good news), and breach risk prediction.

But when it comes to leveraging AI directly in the battle against threat actors, it’s still early days. Some 67% of survey respondents say their organization’s use of AI “barely scratches the surface.”

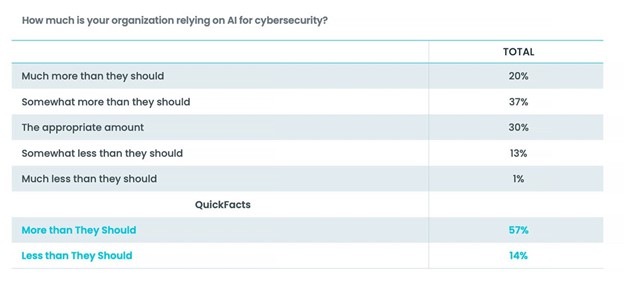

Here is how respondents feel about their organization’s reliance on AI in their cybersecurity program.

More than half of respondents believe their organization is, at least currently, relying too much on AI. Fewer than one-third think the reliance on AI is appropriate, while a minority of respondents believe their organization isn’t doing enough with AI.

Myth #2: AI Will Solve Security Problems

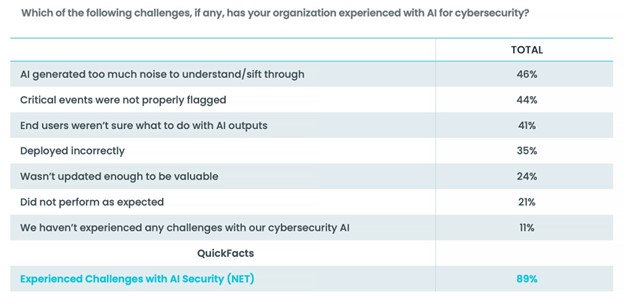

When asked for their thoughts about the challenges posed by AI use in their organizations, respondents weren’t shy. Only 11% of respondents said they haven’t experienced any problems using AI for cybersecurity. Most respondents see things quite differently.

When asked where in their organization’s security stack AI-related challenges occurred, respondents pointed to core cybersecurity functions. While IT asset inventory management was the top AI problem area, according to 53% of respondents, three core cybersecurity categories followed:

- Threat detection (33%)

- Understanding cybersecurity strengths and gaps (24%)

- Breach risk prediction (23%)

It’s interesting to note that incident response was cited by far fewer respondents (13%) for posing AI-related challenges.

Myth #3: AI is Intelligent, so It Must Be Effective

It seems evident that while AI is already being used in cybersecurity, the results are mixed. The truth behind the AI Big Lie is that not all AI is as “intelligent” as the name implies, and that’s even before accounting for mismatches in organizational needs and capabilities.

The cybersecurity industry has long been seeking “silver bullet” solutions, and AI is the latest one. Organizations must be deliberate and results-driven in how they evaluate and deploy AI solutions. Unless SOC teams combine AI with experienced experts who are deeply versed in the technology, they risk failure in a critical area with little to no room for error.

Source: Devo